You might come across a situation where your Outlook application fails to connect to the Office 365...

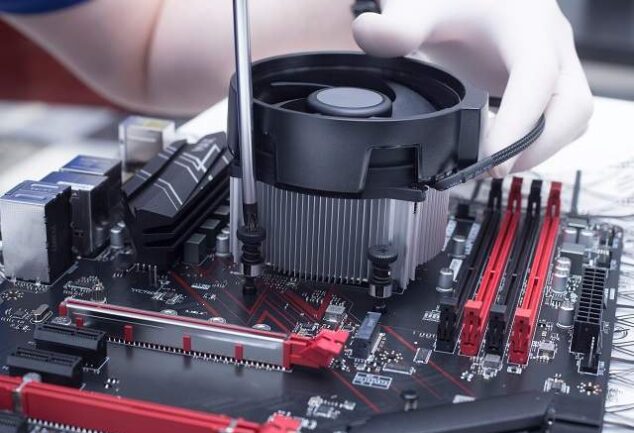

How to Fix Red Light Errors on Motherboard (Step-by-Step Guide)...

Introduction: Understanding Red Light Errors on Motherboard. A red light on a motherboard can be...

Write SEO Content That Ranks: Engaging Readers & Search...

Key Takeaways: Understand the balance between engaging content and SEO principles. Learn how data...

The Future of Digital Communication: Balancing Technology and...

Key Takeaways: Digital communication is rapidly evolving, bringing both opportunities and...

How to Use Apple Maps on Web Browser: 3 Powerful Tips for...

Wondering how to use Apple Maps in a web browser? Let’s find the answer in this article...

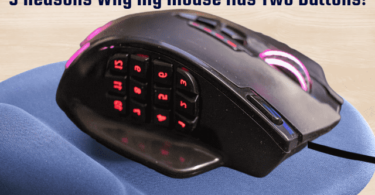

5 Reasons Why My Mouse Has Two Buttons: Unlock the Secrets!

Computer mice are input devices used with computers. If you want to move the...

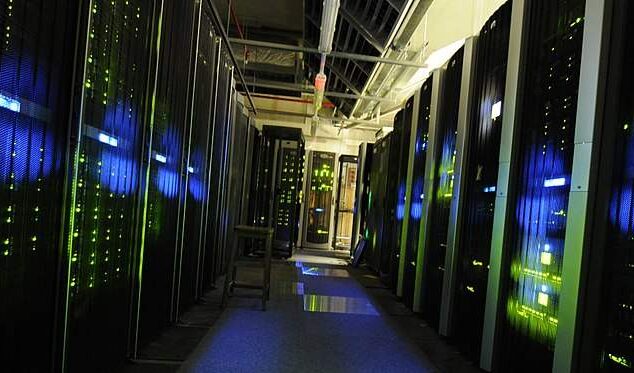

A Beginner’s Guide to Windows VPS Hosting

Finding the right solution for your website or business in web hosting can be daunting. With so...

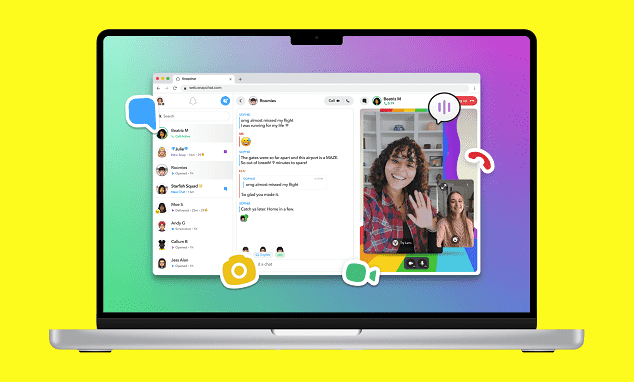

7 Effective Ways to Get Someone Off Your Best Friends List on...

Are you wondering how to get someone off your best friends list on Snapchat? Previously, after...

7 Incredible Ways to Effortlessly Use Snapchat on PC or Laptop

Wondering how to use Snapchat on a PC or laptop? Then delve into...

Does a VPN Slow Down Your Internet?

Introduction. Understanding VPNs: A Brief Overview. In this digital landscape, ensuring online...

Vertex AI

The Google Cloud AI Platform team has been creating a unified view of the ML landscape for the past...

How to Copy and Paste on Chromebook?

Chromebooks have become very popular because of their price. These devices are faster...