When comparing processors, cache matters as much as transistor counts and clock frequencies. Whenever CPUs (central processing units) are discussed, the term cache memory comes up, yet few people pay attention to the cache figures on a spec sheet. This article explains the CPU cache, a hardware cache that the CPU uses to reduce the average time needed to access data from main memory.

A cache is a smaller, faster memory located close to a processor core. It stores copies of data from frequently used main memory locations and is usually implemented with SRAM. Other kinds of cache also exist, such as the TLB (translation lookaside buffer), which is part of the MMU (memory management unit).

What is CPU Cache Memory?

CPU cache memory is a small, very fast type of memory built into the processor.

In the early days of computing, both processors and memory were slow. From the 1980s onward, however, processor speeds rose much faster than memory speeds. System memory could no longer keep up with the CPU, and so CPU cache memory was born: a new type of ultrafast memory.

A PC contains several types of memory. First, there is the main storage drive, such as a hard disk or SSD, which holds large amounts of data.

Next comes RAM (random access memory), which is much faster than the storage drive. The computer and the programs running on it use RAM to store frequently accessed data, which helps keep actions fast.

Finally, the CPU has even faster memory units within itself, known as the CPU memory cache. Computer memory forms a hierarchy based on operational speed, and the CPU cache sits at the top of it. It is built into the CPU, meaning it is part of the chip itself.

Computer memory also comes in different types. System RAM is dynamic (DRAM), while cache memory is static (SRAM). Static RAM holds data without needing to be constantly refreshed, unlike dynamic RAM, which makes SRAM well suited to cache memory. Most modern desktop, server, and industrial CPUs have at least three independent caches:

Instruction cache: It helps to speed up executable instruction fetch.

Data cache: It helps to increase the speed of data fetch and store. The data cache is set up as a hierarchy of L1, L2, etc., cache levels.

Translation lookaside buffer (TLB): It speeds up virtual-to-physical address translation for both executable instructions and data. A single TLB can serve both instructions and data, or a separate instruction TLB (ITLB) and data TLB (DTLB) can be provided. Note, however, that the TLB is part of the memory management unit (MMU) and is not directly related to the CPU caches.

How Does CPU Cache Work?

Programs are designed as sets of instructions that the CPU interprets and runs. When you run a program, its instructions have to make their way from the storage drive (for example, the hard drive) to the CPU. This is where the memory hierarchy comes into play.

The data is first loaded into RAM and then sent to the CPU. Modern CPUs can carry out an enormous number of instructions every second, and to make full use of that power, the CPU needs access to super-fast memory.

The memory controller takes the data from RAM and sends it to the CPU cache. Depending on the CPU, the controller is located either on the CPU itself or on the Northbridge chipset on the motherboard.

The cache then carries data back and forth within the CPU. The CPU cache itself also has a hierarchy.

The Levels of CPU Cache Memory: L1, L2, and L3

L1, L2, and L3 Memory Explained

CPU cache memory is divided into three levels: L1, L2, and L3. The hierarchy is again based on speed and, with it, the size of the cache. So, does CPU cache size make a difference to performance?

L1 Cache:

The Level 1 (L1) cache is the fastest memory in a computer system. In terms of access priority, it holds the data the CPU is most likely to need while completing a certain task. Its size depends on the CPU. A few high-end consumer CPUs, such as the Intel i9-9980XE, now come with about 1MB of L1 cache in total. A few server chips, such as Intel’s Xeon range, also have 1-2MB of L1 cache.

There is no standard L1 cache size, so check the CPU specs to determine the exact size before buying. The L1 cache is usually split into two sections: the instruction cache and the data cache. The instruction cache holds information about the operation the CPU has to perform, while the data cache holds the data on which the operation is to be performed.

L2 Cache:

The Level 2 (L2) cache is slower than the L1 cache but larger. Where an L1 cache is typically measured in kilobytes, modern L2 caches are measured in megabytes. For instance, AMD’s highly rated Ryzen 5 5600X has 384KB of L1 cache, 3MB of L2 cache, and 32MB of L3 cache.

The size of the L2 cache varies with the CPU, generally between 256KB and 8MB. Most modern CPUs have more than 256KB of L2 cache, and that size is now considered small. On the other hand, some of the most powerful modern CPUs have an L2 cache exceeding 8MB.

When it comes to speed, the L1 cache wins, but the L2 cache is still much faster than system RAM. As a rough guide, the L1 cache is about 100 times faster than RAM, whereas the L2 cache is around 25 times faster.

L3 Cache:

In the early days, the L3 cache was found on the motherboard. That was when most CPUs were single-core processors. Today, the L3 cache is part of the CPU and can be massive: top-end consumer CPUs have L3 caches of up to 32MB, and some server CPUs have L3 caches of up to 64MB.

The L3 cache is the largest but also the slowest cache memory unit. In modern CPUs, the L1 and L2 caches are present on each core of the chip, whereas the L3 cache is more like a general memory pool that the entire chip can use. Note that the L1 cache is split into two (instruction and data), while the L2 and L3 caches are each progressively larger.

How Much CPU Cache Memory Do You Need?

In general, more CPU cache is better. Current CPUs have more cache memory than older generations, and that cache is often faster as well. Because spec sheets contain a lot of information, it is worth learning how to compare and contrast CPUs effectively so that you can make the right buying decision.

How Does Data Move Between CPU Memory Caches?

Data usually flows from RAM to the L3 cache, then to L2, and finally to L1. When the processor needs data to carry out an operation, it first looks for it in the L1 cache. If the CPU finds the data there, the condition is known as a cache hit. If not, it goes on to look in the L2 cache and then the L3 cache.

If the processor can’t find the data in any of the caches, it tries to access it from system RAM. When that happens, it is called a cache miss.
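The lookup order described above can be sketched in a few lines of Python. This is only an illustration: the `lookup` function and the use of plain dictionaries as caches are assumptions made for the example, and a real cache has a fixed capacity and evicts old entries.

```python
def lookup(address, levels, ram):
    """Search each cache level in order; fall back to RAM if every level misses."""
    for name, cache in levels:
        if address in cache:
            return cache[address], f"hit in {name}"
    value = ram[address]
    # Fill every level on the way back so the next access hits in L1.
    for _, cache in levels:
        cache[address] = value
    return value, "miss (served from RAM)"


# Toy contents: unbounded dictionaries stand in for the caches (a simplification).
ram = {0x10: "a", 0x20: "b"}
l1, l2, l3 = {}, {}, {0x20: "b"}
levels = [("L1", l1), ("L2", l2), ("L3", l3)]

first = lookup(0x10, levels, ram)   # in no cache yet, so it is a miss
second = lookup(0x10, levels, ram)  # now present in L1
third = lookup(0x20, levels, ram)   # only present in L3
```

Here the first access to `0x10` is a miss, the second access hits in L1, and the access to `0x20` hits in L3.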

The cache exists to speed up the back-and-forth of information between the CPU and main memory. The time needed to access data from memory is called latency.

The L1 cache has the lowest latency because it is the fastest and closest to the core, while the L3 cache has the highest. Latency increases sharply on a cache miss because the CPU must then retrieve the data from system memory.

Latency continues to fall as computers become faster and more efficient. Low-latency DDR4 RAM and super-fast SSDs cut down latency and make the whole system quicker, so the speed of system memory is also important.

Does CPU Cache Matter for Gaming?

How much CPU cache do I need for gaming? For gaming, cache can make a huge difference. Traditionally, the vital factors for gaming have been single-threaded performance, instructions per clock (IPC), and clock speed. However, it has become clear that cache is a crucial factor in the competition between AMD and Intel.

Cache matters for gaming because of how games are designed today. Modern games involve a lot of randomness, which means the CPU constantly has to execute simple instructions.

Without enough cache, those instructions pile up and cause a bottleneck, and the graphics card is left waiting on the CPU. The difference cache can make is visible with AMD’s 3D V-Cache technology in Far Cry 6.

There is a trend toward more cache for gaming. For example, AMD’s Ryzen 3000 CPUs came with twice as much L3 cache as earlier generations, which made them quicker for gaming. With the launch of Ryzen 5000, AMD did not add more cache. Instead, it unified the two blocks of L3 cache within the CPU, which reduced latency and increased gaming performance.

Intel has been playing catch-up with AMD. Its current generation, Alder Lake, comes with up to 30MB of L3 cache, which is less than most Ryzen CPUs, although it has plenty of L1 and L2 cache. Intel’s deficit in L3 capacity does not mean, however, that Ryzen 5000 CPUs are faster in gaming.

The race is set to continue with the upcoming Ryzen 7000 and Raptor Lake CPUs. Ryzen 7000 is expected to have twice the L2 cache of Ryzen 5000, and more CPUs using V-Cache are likely to follow. Intel has no equivalent of V-Cache so far, but Raptor Lake is expected to come with more L3 cache than Alder Lake.

Associativity:

Associativity is determined by the placement policy, which decides where in the cache a copy of a particular main memory entry goes. If the placement policy is free to choose any entry in the cache to hold the copy, the cache is called fully associative. At the other extreme, if each entry in main memory can go in only one place in the cache, the cache is direct-mapped.

Many caches implement a compromise in which each entry in main memory can go to any one of N places in the cache. This is known as N-way set associative. For instance, the L1 data cache in an AMD Athlon is two-way set associative, which means that any particular location in main memory can be cached in either of two locations in the L1 data cache.

Common cache organizations include:
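To make the placement idea concrete, here is a minimal Python sketch of how an address can be split into a tag, a set index, and a block offset. The function name `split_address` and the geometry (64-byte blocks, 128 sets) are assumptions chosen for illustration, not the layout of any particular CPU.

```python
def split_address(address, block_size, num_sets):
    """Split an address into (tag, set_index, block_offset).

    Hardware uses power-of-two sizes so that this is plain bit slicing;
    integer division and modulo give the same result.
    """
    offset = address % block_size        # byte position inside the block
    block_number = address // block_size
    set_index = block_number % num_sets  # which set the block must go into
    tag = block_number // num_sets       # identifies the block within that set
    return tag, set_index, offset


# Hypothetical geometry: 64-byte blocks and 128 sets.
tag, set_index, offset = split_address(0x12345, block_size=64, num_sets=128)
# tag == 9, set_index == 13, offset == 5
```

In an N-way set-associative cache, the block may sit in any of the N ways of set 13, and the stored tag (9) is what distinguishes it from other blocks that share the same set.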

- Direct mapped cache

- Two-way set associative cache

- A two-way skewed associative cache

- Four-way set-associative cache

- Eight-way set-associative cache

- 12-way set associative cache, similar to eight-way

- Fully associative cache

Direct-mapped cache:

In a direct-mapped cache, every location in main memory can go in only a single entry in the cache. It can therefore also be called a “one-way set associative” cache. There is no placement policy as such, because there is no choice about which cache entry’s contents to evict. This means that if two locations map to the same entry, they continually knock each other out. Although a direct-mapped cache is simpler, it needs to be much larger than an associative one to offer comparable performance.
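The knock-out effect can be shown with a small simulation. The class below is a simplified sketch that tracks only tags, not data, and its name and sizes (4 lines of 16 bytes) are made up for the example.

```python
class DirectMappedCache:
    def __init__(self, num_lines, block_size):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines  # one tag per line, None means empty

    def access(self, address):
        """Return True on a hit; on a miss, overwrite the single candidate line."""
        block = address // self.block_size
        index = block % self.num_lines
        tag = block // self.num_lines
        if self.tags[index] == tag:
            return True
        self.tags[index] = tag
        return False


cache = DirectMappedCache(num_lines=4, block_size=16)
# Addresses 0 and 64 are blocks 0 and 4, which both map to line 0.
results = [cache.access(a) for a in (0, 64, 0, 64)]
# results == [False, False, False, False]: every access misses.
```

Even though three of the four lines stay empty, the two addresses keep evicting each other because neither has anywhere else to go.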

Two-way set associative cache:

If each location in main memory can be cached in either of two locations in the cache, the question is which of the two. The simplest and most common scheme uses the least significant bits of the memory location’s index as the index for the cache and keeps two entries for each index. One advantage of this scheme is that the tags stored in the cache do not need to include the part of the main memory address that is already implied by the cache index. Because the cache tags have fewer bits, they need fewer transistors, take up less space on the processor circuit board or the microprocessor chip, and can be read and compared faster. LRU replacement is also simple because only one bit needs to be stored for each pair.
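The one-bit LRU scheme can be sketched as follows. As with any toy model, this is an illustration under assumptions: the class name `TwoWayCache` is hypothetical, only tags are tracked, and the sizes are chosen so that three addresses compete for one set.

```python
class TwoWayCache:
    def __init__(self, num_sets, block_size):
        self.num_sets = num_sets
        self.block_size = block_size
        self.tags = [[None, None] for _ in range(num_sets)]
        self.lru = [0] * num_sets  # one bit per set: the way to evict next

    def access(self, address):
        """Return True on a hit, False on a miss (which fills the LRU way)."""
        block = address // self.block_size
        index = block % self.num_sets
        tag = block // self.num_sets
        ways = self.tags[index]
        for way in (0, 1):
            if ways[way] == tag:
                self.lru[index] = 1 - way  # the other way is now least recent
                return True
        victim = self.lru[index]
        ways[victim] = tag
        self.lru[index] = 1 - victim
        return False


cache = TwoWayCache(num_sets=2, block_size=16)
# Addresses 0, 64 and 128 all map to set 0.
results = [cache.access(a) for a in (0, 64, 0, 64, 128, 0)]
# results == [False, False, True, True, False, False]
```

Unlike in a direct-mapped cache, addresses 0 and 64 can now coexist, so the repeated accesses hit. Only when a third conflicting address (128) arrives does the least recently used entry get evicted.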

Speculative execution:

One benefit of a direct-mapped cache is that it allows simple and fast speculation. As soon as the address has been computed, the one cache index that might contain a copy of that location is known. That entry can be read, and the processor can keep working with the data before it has finished checking whether the tag actually matches the requested address.

The idea of using cached data before the tag match completes can be applied to associative caches as well. A subset of the tag, called a hint, can be used to pick just one of the possible cache entries that map to the requested address. The entry selected by the hint can then be used in parallel with checking the full tag. The hint technique works best in the context of address translation.

Two-way skewed associative cache:

In a two-way skewed associative cache, the index for way 0 is direct, whereas the index for way 1 is formed with a hash function. A good hash function has the property that addresses which conflict under the direct mapping tend not to conflict when mapped with the hash function. The drawback is the additional latency of calculating the hash function. In addition, when it is time to load a new line and evict an old one, it can be hard to determine which existing line was least recently used, because the new line conflicts with data at different indexes in each way. In conventional (non-skewed) caches, LRU tracking is usually done on a per-set basis. Even so, skewed-associative caches offer significant benefits over conventional set-associative ones.
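A short sketch shows how a hashed second index separates addresses that collide under direct mapping. The XOR-based hash here is an illustrative choice only, not the function used by any real design, and `skewed_indexes` is a hypothetical helper.

```python
def skewed_indexes(block, num_sets):
    """Return the (way 0, way 1) indexes for a block number."""
    way0 = block % num_sets                          # direct index
    way1 = (block ^ (block // num_sets)) % num_sets  # illustrative XOR hash
    return way0, way1


a = skewed_indexes(0, 8)  # (0, 0)
b = skewed_indexes(8, 8)  # (0, 1)
```

Blocks 0 and 8 share index 0 in way 0, but the hash sends them to different indexes in way 1, so both can stay in the cache at once.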

Pseudo-associative cache:

A true set-associative cache checks all possible ways simultaneously, using something like a content-addressable memory. A pseudo-associative cache instead tests each possible way one at a time. A hash-rehash cache and a column-associative cache are two examples. When the hit is found in the first way tested, which is the common case, a pseudo-associative cache is as fast as a direct-mapped cache, yet it has a much lower conflict miss rate.

Multicolumn cache:

Compared with a direct-mapped cache, a set-associative cache uses fewer bits for the set index that maps to a cache set. Each set holds multiple ways, or blocks: two blocks in a 2-way set-associative cache and four blocks in a 4-way set-associative cache. The index bits that are no longer used become part of the tag bits. For example, a 2-way set-associative cache contributes 1 bit to the tag, and a 4-way set-associative cache contributes 2 bits.

The basic idea of the multicolumn cache is to use the set index to map to a cache set, as a conventional set-associative cache does, and to use the added tag bits to index a way within the set. For example, in a 4-way set-associative cache, the two bits index way 00, way 01, way 10, and way 11, respectively. This double cache indexing is called a “major location mapping,” and its latency is the same as a direct-mapped access.

A multicolumn cache keeps a high hit ratio thanks to its high associativity, and its latency is comparable to that of a direct-mapped cache because a high percentage of hits occur in major locations. When a new block conflicts with the block in its major location, the existing block is moved to another way in the same set, called a selected location. The concepts of major and selected locations from the multicolumn cache are used in several cache designs, including ARM chips.
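The major location mapping can be sketched as a two-step index: the set index picks the set, and the low bits of the tag pick the way. The helper `major_location` and the sizes used are assumptions for the example, and the sketch leaves out the handling of selected locations.

```python
def major_location(block, num_sets, num_ways):
    """Return (set_index, way) of a block's major location."""
    set_index = block % num_sets
    tag = block // num_sets
    way = tag % num_ways  # the added tag bits select the way
    return set_index, way


# Block number 0b1101011 in a cache with 8 sets and 4 ways:
# the low three bits (011) give set 3, the next two bits (01) give way 1.
location = major_location(0b1101011, num_sets=8, num_ways=4)
# location == (3, 1)
```

Because both steps are simple bit selections, finding the major location costs no more than a direct-mapped lookup.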

Cache miss:

So what is a cache miss? It is a failed attempt to read or write a piece of data in the cache, which results in a main memory access with much longer latency. There are three kinds of cache miss:

- Instruction read miss,

- Data read miss, and

- Data write miss.

Read misses from an instruction cache generally cause the longest delay, because the processor, or at least the thread of execution, must wait (stall) until the instruction is fetched from main memory.

Read misses from a data cache usually cause a smaller delay, because instructions that do not depend on the cache read can still be issued and continue executing until the data is returned from main memory. The dependent instructions can then resume execution.

Write misses to a data cache generally cause the shortest delay, because the write can be queued and there are few limits on executing subsequent instructions. The processor can continue until the queue is full.

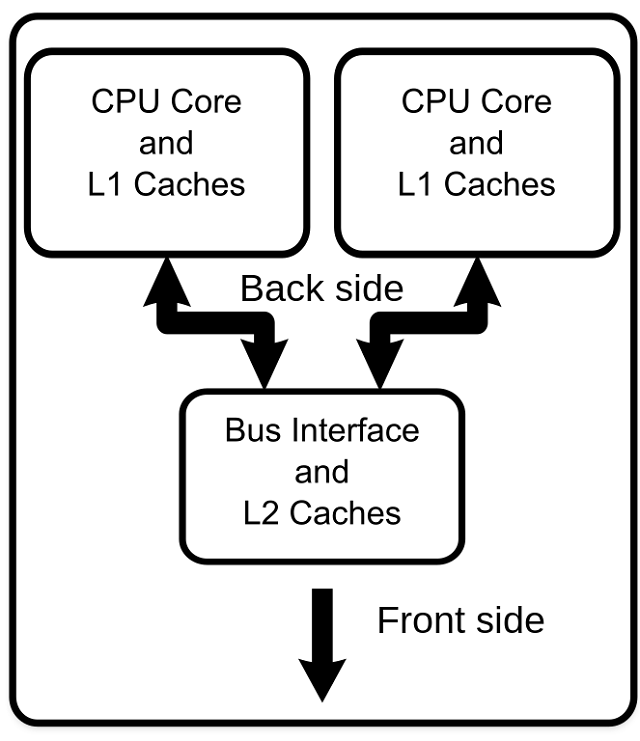

Cache hierarchy in a modern processor

Modern processors have multiple interacting on-chip caches. The operation of a particular cache can be specified by:

- the cache size,

- the cache block size,

- the number of blocks in a set,

- the cache set replacement policy, and

- the cache write policy (write-through or write-back).

All the blocks in a particular cache are the same size and have the same associativity. Typically, the “lower-level” caches (Level 1) have fewer blocks, smaller block sizes, and fewer blocks in a set, but very short access times. The “higher-level” caches (Level 2 and above) have progressively more blocks, larger block sizes, more blocks in a set, and relatively longer access times, although they are still much faster than main memory. The cache entry replacement policy is determined by the cache algorithm that the processor designers choose to implement. In some cases, multiple algorithms are provided for different kinds of workloads.
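The first three parameters in the list above fix the shape of a cache. As a quick worked example, the number of sets follows from the cache size, the block size, and the number of blocks in a set. The figures below are hypothetical and chosen only to keep the arithmetic easy.

```python
def number_of_sets(cache_size, block_size, blocks_per_set):
    """Sets = total blocks / blocks per set (all sizes in bytes)."""
    total_blocks = cache_size // block_size
    return total_blocks // blocks_per_set


KIB = 1024
eight_way = number_of_sets(32 * KIB, 64, 8)        # 64 sets
direct_mapped = number_of_sets(32 * KIB, 64, 1)    # 512 sets, one block each
fully_assoc = number_of_sets(32 * KIB, 64, 512)    # 1 set holding every block
```

Direct-mapped and fully associative caches are simply the two ends of the same formula: one block per set, or one set for all blocks.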

Specialized caches:

A pipelined CPU accesses memory from several points in the pipeline:

- instruction fetch,

- virtual-to-physical address translation, and

- data fetch

The natural design is to use a different physical cache for each of these points, so that no single physical resource has to serve two points in the pipeline. The pipeline therefore ends up with at least three separate caches (instruction, TLB, and data), each specialized for its particular role.

Victim cache:

A victim cache holds blocks that have been evicted from a CPU cache upon replacement. It lies between the main cache and its refill path and holds only blocks of data that were evicted from the main cache. The victim cache is usually fully associative and is intended to reduce the number of conflict misses.

Many commonly used programs do not need associative mapping for all accesses. In fact, only a small fraction of a program’s memory accesses require high associativity. The victim cache exploits this property by offering high associativity to only those accesses.
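The effect of a victim cache can be sketched by attaching a small fully associative buffer to a direct-mapped cache. The class name, the sizes, and the oldest-first replacement of victims are assumptions made for this illustration.

```python
from collections import OrderedDict


class DirectMappedWithVictim:
    def __init__(self, num_lines, victim_entries):
        self.num_lines = num_lines
        self.lines = [None] * num_lines  # block number held by each line
        self.victims = OrderedDict()     # small fully associative buffer, oldest first
        self.victim_entries = victim_entries

    def access(self, block):
        index = block % self.num_lines
        if self.lines[index] == block:
            return "hit"
        evicted = self.lines[index]
        self.lines[index] = block
        result = "miss"
        if block in self.victims:
            del self.victims[block]      # block moves back into the main cache
            result = "victim hit"
        if evicted is not None:
            self.victims[evicted] = True
            if len(self.victims) > self.victim_entries:
                self.victims.popitem(last=False)  # drop the oldest victim
        return result


cache = DirectMappedWithVictim(num_lines=4, victim_entries=2)
# Blocks 0 and 4 conflict on line 0 of the main cache.
results = [cache.access(b) for b in (0, 4, 0, 4)]
# results == ["miss", "miss", "victim hit", "victim hit"]
```

After the two initial misses, the conflicting blocks are found in the victim buffer instead of being fetched from the next level again, which is exactly the conflict-miss case the victim cache targets.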

Trace cache:

Also known as the execution trace cache, this type of cache was used in the Intel Pentium 4 microprocessors. It is a mechanism for boosting instruction fetch bandwidth and reducing power consumption.

A trace cache stores instructions either after they have been decoded or as they are retired. Instructions are generally added to trace caches in groups that represent either individual basic blocks or dynamic instruction traces.

Write Coalescing Cache (WCC):

The WCC is a special cache that is part of the L2 cache in AMD’s Bulldozer microarchitecture. Its task is to reduce the number of writes to the L2 cache.

Micro-operation (μop or uop) cache:

Also called a μop cache, uop cache, or UC, this cache stores the micro-operations of decoded instructions, as received directly from the instruction decoders or from the instruction cache. When an instruction needs to be decoded, the μop cache is checked for its decoded form, which is reused if it is cached. If it is not found, the instruction is decoded and then cached.

Although it has many similarities with a trace cache, a μop cache is much simpler and therefore offers better power efficiency. This makes it better suited to implementations on battery-powered devices.
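The check-then-decode flow of a μop cache resembles memoization in software, and a rough sketch of it follows. Everything here is a stand-in: `toy_decode` is a made-up decoder, instructions are plain strings, and a real μop cache is indexed by instruction address and has limited capacity.

```python
def make_decoder(decode):
    """Wrap a decode function with a cache of already decoded instructions."""
    uop_cache = {}
    stats = {"hits": 0, "misses": 0}

    def fetch_uops(instruction):
        if instruction in uop_cache:
            stats["hits"] += 1    # reuse the decoded form
        else:
            stats["misses"] += 1  # decode once, then cache the result
            uop_cache[instruction] = decode(instruction)
        return uop_cache[instruction]

    return fetch_uops, stats


def toy_decode(instruction):
    # Hypothetical decoder: one "micro-operation" per token.
    return tuple(f"uop:{part}" for part in instruction.split())


fetch_uops, stats = make_decoder(toy_decode)
first = fetch_uops("add r1 r2")   # decoded and cached
second = fetch_uops("add r1 r2")  # served from the cache, decoder skipped
```

The second request skips the decoder entirely, which is where the power savings come from in loops that execute the same instructions repeatedly.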

Branch target instruction cache:

This cache holds the first few instructions at the destination of a taken branch. It is used by low-powered processors that do not need a normal instruction cache because the memory system can deliver instructions fast enough to satisfy the CPU without one. However, that applies only to consecutive instructions in sequence. Restarting instruction fetch at a new address still takes several cycles, and the branch target instruction cache supplies instructions for those cycles.

Smart cache:

Smart Cache is a level 2 or level 3 caching method for multiple execution cores. It shares the actual cache memory between the cores of a multi-core processor, which makes sharing data among the execution cores quicker.

Multi-level caches:

Another issue is the fundamental trade-off between cache latency and hit rate. Larger caches have better hit rates but longer latency. To address this trade-off, many computers use multiple levels of cache, with small, fast caches backed up by larger, slower ones. Multi-level caches generally operate by checking the fastest cache, Level 1, first. If it hits, the processor proceeds at high speed. If that smaller cache misses, the Level 2 cache is checked, and so on, before external memory is accessed. The benefit of Level 3 and Level 4 caches depends on the application’s access patterns.
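The trade-off can be put into numbers with the standard average memory access time calculation: each level adds its latency for the share of accesses that reach it. The latencies and hit rates below are invented for illustration and do not describe any real CPU. The hit rates are local, meaning they are measured against the accesses that actually reach that level.

```python
def average_access_time(levels, memory_latency):
    """Average latency in cycles.

    levels: list of (hit_latency, local_hit_rate), fastest level first.
    """
    total = 0.0
    reach_probability = 1.0  # chance that an access gets as far as this level
    for latency, hit_rate in levels:
        total += reach_probability * latency
        reach_probability *= 1.0 - hit_rate
    return total + reach_probability * memory_latency


# Hypothetical numbers: L1 takes 4 cycles and hits 90% of the time,
# L2 takes 12 cycles and hits 50% of what reaches it, memory takes 200 cycles.
with_two_levels = average_access_time([(4, 0.9), (12, 0.5)], 200)
with_l1_only = average_access_time([(4, 0.9)], 200)
without_cache = average_access_time([], 200)
```

With these made-up figures the average comes to about 15.2 cycles with both levels, about 24 cycles with L1 alone, and 200 cycles with no cache, which shows why a larger but slower second level still pays off.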

Examples of products incorporating L3 and L4 caches include:

- Alpha 21164 (1995): 1 to 64 MiB of off-chip L3 cache.

- IBM POWER4 (2001): off-chip L3 caches of 32 MiB per processor.

- Itanium 2 (2003): a 6 MiB unified on-die L3 cache.

- Intel Xeon MP “Tulsa” (2006): 16 MiB of on-die L3 cache shared between two processor cores.

- AMD Phenom II (2008): up to 6 MiB of on-die unified L3 cache.

- Intel Core i7 (2008): an 8 MiB on-die unified L3 cache.

The Future of CPU Cache Memory:

Cache memory design keeps evolving, especially as memory gets faster and cheaper. AMD’s Smart Access Memory and Infinity Cache are two recent innovations, and both are designed to boost PC performance.

The bottom line:

You might think that having more cache memory is always beneficial. When you shop for a processor, the product page usually lists the amount of Level 3 cache, and higher-end models tend to have a few more megabytes of it. Remember that the L1 and L2 caches are smaller yet faster caches that the CPU tries first before checking for data in the Level 3 cache.

Does more cache mean more frames per second (FPS) in games, or better performance in other applications? It depends on the application, although performance often improves on CPUs with bigger caches. If you are buying a high-end processor because you want better overclocking potential or more cores, it will generally have more cache as well. And if you are set on high-end silicon, make sure you have checked the amount of cash available in your wallet.

Frequently Asked Questions:

- What does a CPU cache do?

A cache is a temporary memory, officially called “CPU cache memory.” This chip-based feature lets your computer access frequently used information much faster than it could from the PC’s main hard drive.

- What is CPU cache vs. RAM?

CPU cache is built into the CPU or placed on an adjacent chip. It can be accessed ten to a hundred times faster than RAM, needing only a few nanoseconds to respond to a CPU request.

- Is more cache a better CPU?

The CPU cache keeps the data the CPU is working on close at hand. A bigger cache generally means a quicker PC, because retrieving information from CPU cache memory takes less time than fetching it from RAM or from storage devices such as hard drives.